There's a saying that there's no such thing as bad publicity. With Google's Gemini AI program, I'm not so sure.

By now, a lot of us have played around with ChatGPT and similar AI software and found that it can at least attempt to answer most of our questions, even if the answers are sometimes horribly wrong.

For example, about a week ago, I asked ChatGPT to write an article in my style. Instead, it wrote one about me that wasn't very accurate, which I copied and pasted over at X.

I told ChatGPT: "Write a column in the style of Tom Knighton"

— Tom Knighton (@TheTomKnighton) February 21, 2024

Here's what it gave me:

Title: "Defending the Second Amendment: A Call for Reason and Responsibility"

By [Your Name]

In the ongoing debate over gun rights, it's easy to get caught up in the polarized rhetoric that…

I honestly didn't expect much from ChatGPT and I didn't get much. I posted it as an amusing thing about how AI isn't really as ready for some things as many like to believe.

Which is fine.

Then Gemini was unveiled and things got...weird.

As Sarah Arnold noted at our sister site Townhall, Gemini essentially rewrote American history, generating images of the Founding Fathers as black or Native American.

Then a host of other people started asking Gemini to do things and posting the results. It became clear that Gemini had a bias. Yet when I tried to replicate the findings, I got zilch. Google was apparently working to refine things so it wouldn't be "woke," as many called it.

Supposedly, at least.

But I decided to give it another shot. I wanted to see what it would tell me about gun control. After all, that's a topic near and dear to my heart.

Well, this was...interesting.

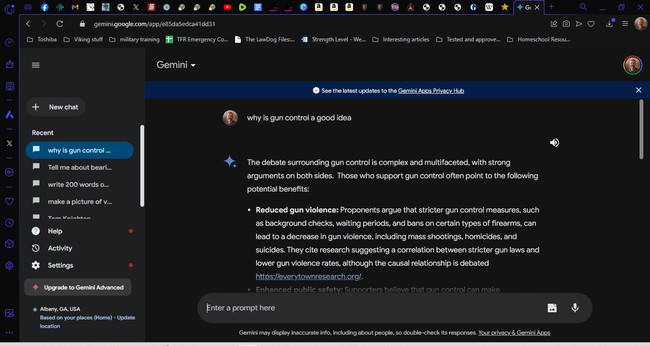

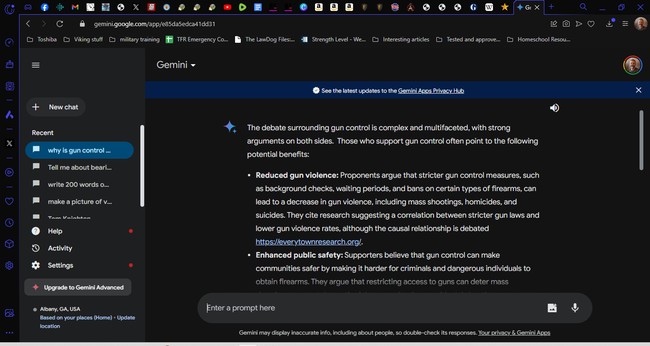

I started by asking, "Why is gun control a good idea?"

That answered my question, more or less. It's kind of what I expected from the question.

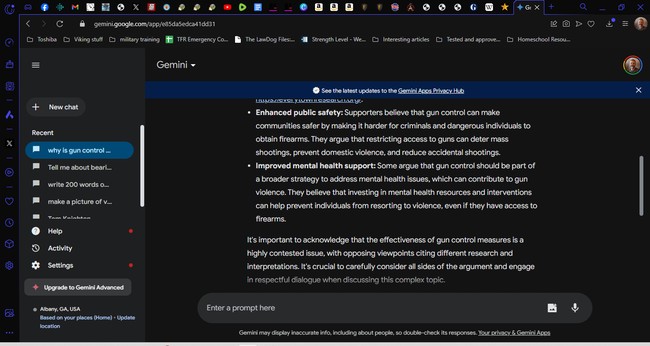

So, I decided to change a single word. "Why is gun control a bad idea?"

This is what I got.

Here's a link to the conversation so you can see for yourself that these aren't altered images.

We know that Google has a leftist bias, which is going to include a propensity to support gun control. That's been very clear to everyone since James Damore got canned there. We know where they stand on the issue.

But Gemini wasn't billed as a progressive, leftist AI. It's supposed to be something very different.

It's not difficult to see that yes, there is a bias. Gemini is supposedly the new hotness in AI software, a lot of people are turning to AI to get their questions answered, and Google knows this, so I can't see the bias as anything but intentional.

Bias like this provides just one side of the story. People looking for reasons why gun control might be bad are going to come away with nothing, but those who want the opposite will get a lengthy explanation.

Now, it's easy to say that no one should get their talking points from an AI, but we both know that people are going to do it. Google's job was to make Gemini useful to people in general, not just to anti-gunners and people with various axes to grind.

At this point, though, no one should be shocked.

NASA's Gemini missions were necessary stepping stones to get us to the moon. Google's Gemini is a major step backward for technology.
